Part One:

How do movements couple to sounds in the natural environment, and can paired dance communication be improvised in both the movement and the musical composition realms? I used Sonic Pi to generatively sample sounds recorded in nature and turn them into musical beats and rhythms. These beats will couple to pair-dance metaphors drawn from the paradigms of salsa and zouk, which are popular dances in Panama. Specifically, the project consists of the following phases:

https://www.dinacon.org/wp-content/uploads/2019/10/Ray_GenerativeMusicDinacon2019.txt
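The generative sampling described above lives in the linked Sonic Pi file, which is not reproduced here. As a hedged illustration of the idea only, the Python sketch below builds one cycle of a step pattern: a fixed son clave (3-2) skeleton on a 16-step grid always plays, and the remaining steps are filled at random from a pool of nature samples. The sample names, the fill probability, and the use of the clave as a skeleton are assumptions, not the project's actual code.

```python
import random

# Hypothetical names for nature field recordings (not the project's actual files).
SAMPLES = ["frog_click", "insect_buzz", "rain_drop"]

# Son clave (3-2) on a 16-step grid: "x..x..x...x.x..."
SON_CLAVE_3_2 = [0, 3, 6, 10, 12]


def generate_cycle(seed, fill_probability=0.25, steps=16):
    """Return one clave cycle as a list of (step, sample_name) hits.

    Clave steps always play SAMPLES[0]; each remaining step plays a random
    choice from SAMPLES[1:] with probability fill_probability.
    """
    rng = random.Random(seed)  # seeded so a given pattern can be replayed
    hits = []
    for step in range(steps):
        if step in SON_CLAVE_3_2:
            hits.append((step, SAMPLES[0]))
        elif rng.random() < fill_probability:
            hits.append((step, rng.choice(SAMPLES[1:])))
    return hits


if __name__ == "__main__":
    for step, name in generate_cycle(seed=1):
        print(step, name)
```

In Sonic Pi itself, each returned hit would roughly correspond to a `sample` call at that step inside a `live_loop`, with `sleep` advancing through the grid. Keeping the seed fixed repeats a pattern, while changing it produces a new variation over the same clave.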

1. Record sounds in the natural environment of Panama and use them to construct simple phrases in Sonic Pi, choosing envelopes that shape them into beat sounds which, when live-looped together, produce Latin-like rhythms.

2. Begin recruiting conference attendees for a performance that involves dancing in sync to the collected beats. I will teach simple salsa and bachata steps to those unfamiliar with Latin dancing, so that everyone can practice together even without formal training.

3. We will construct a wearable interface for switching between different Sonic Pi sketches that generate different sounds. We will prototype a Teensy-based device that uses accelerometer data to switch between beats. The choice of beat will be made by the leader of the dance pair.

4. We will user-test a pair of dancers, one of whom (the leader) can switch between rhythms and music that inspire different dance forms and speeds. The leader can choose both her steps and the musical rhythms being generated. For example, she can choose to dance bachata rather than salsa, or to do a dip in the salsa, and can choose the musical motifs appropriate to these specific actions.

5. If time permits, we will organize a Casino Rueda performance with pairs of dancers who can each control the music in different ways. If the technology does not permit this, we can prototype the process using calls, much like those in Casino Rueda, that cue our DJ to change the music.
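Phases 3 and 4 depend on the leader's movements selecting the music. One minimal way to do this is sketched here in Python for readability (firmware on a Teensy would normally be written in C++ with the Arduino toolchain). It treats a spike in acceleration magnitude as a switching gesture and then ignores further spikes for a short cooldown, so that a single spin or dip does not trigger several switches. The threshold, cooldown, sketch names, and the magnitude-threshold approach itself are assumptions for illustration. How the chosen sketch is then communicated to Sonic Pi (for example, as an OSC message) is left out.

```python
import math


class SketchSwitcher:
    """Cycle through Sonic Pi sketches when the leader makes a sharp gesture.

    A gesture is a reading whose acceleration magnitude (in g) exceeds
    `threshold`. After a switch, `cooldown` further readings must arrive
    before another switch is accepted.
    """

    def __init__(self, sketches, threshold=2.0, cooldown=50):
        self.sketches = list(sketches)  # hypothetical sketch names
        self.threshold = threshold
        self.cooldown = cooldown
        self.index = 0
        self._since_switch = cooldown  # allow the very first gesture to switch

    @property
    def current(self):
        return self.sketches[self.index]

    def update(self, ax, ay, az):
        """Feed one accelerometer reading; return the new sketch if switched, else None."""
        self._since_switch += 1
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if magnitude > self.threshold and self._since_switch >= self.cooldown:
            self.index = (self.index + 1) % len(self.sketches)
            self._since_switch = 0
            return self.current
        return None


if __name__ == "__main__":
    switcher = SketchSwitcher(["salsa_loop", "bachata_loop", "dip_motif"], cooldown=3)
    for reading in [(0, 0, 1), (2.5, 0, 1), (2.5, 0, 1), (0, 0, 1), (3, 0, 0)]:
        print(switcher.update(*reading))
```

At rest the magnitude sits near 1 g because of gravity, so the threshold has to sit clearly above that; 2 g is only a starting guess that would need tuning against real dance recordings.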

The project investigates whether improvisation in dance can also be coupled to improvisation in music. Can we create a system for changing both the musicality and the movements in dance? We aim to investigate this in a natural context, where Latin rhythms and natural sounds serve as samples for a performance of higher-order improvisation.

Part Two:

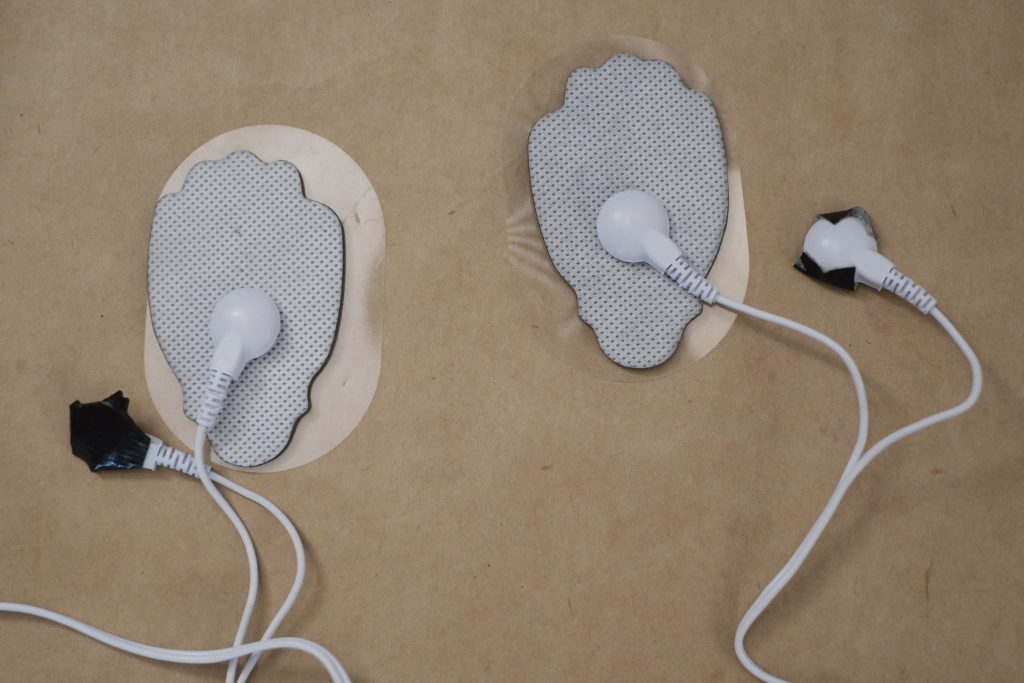

Can EEG be used as a source of sound, and can this sound harmonize with the environment? This project generates a symphonic work from human EEG attention data and EEG data in the wild. I use a MindWave Mobile headset to get attention data from humans and translate that scale to pitch for the melody. I use plant electrical data recorded with plant electrodes (thanks to Seamus) to generate the tonic portion of the work. Combining the phasic EEG music with the tonic plant environmental music gives voice to the way we operate in the universe. We humans make a lot of phasic noise, but the plants and environment of the world embody the tone and mood that form the substance of a work. We co-create with electrical recordings from the brain and the plant to make a symphony of Gamboa.
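The exact mapping from attention to melody pitch is not spelled out here. A minimal sketch, assuming attention arrives as the MindWave's 0 to 100 eSense attention value, is to quantize it onto a scale so that every attention level yields an in-key note, then convert MIDI note numbers to frequency with the standard equal-temperament formula f = 440 · 2^((m - 69)/12). The A minor pentatonic scale, the two-octave range starting at A3, and the function names are hypothetical choices, not the project's.

```python
# Hypothetical scale choice: A minor pentatonic (semitone offsets from the root).
PENTATONIC_STEPS = [0, 3, 5, 7, 10]


def scale_notes(root_midi=57, octaves=2):
    """MIDI note numbers of the scale, starting at A3 (MIDI 57) by default."""
    return [root_midi + 12 * o + step for o in range(octaves) for step in PENTATONIC_STEPS]


def attention_to_midi(attention, notes=None):
    """Map attention (0..100) to a scale note: higher attention, higher pitch."""
    if notes is None:
        notes = scale_notes()
    attention = max(0, min(100, attention))  # clamp out-of-range readings
    index = round(attention / 100 * (len(notes) - 1))
    return notes[index]


def midi_to_hz(midi):
    """Equal-temperament frequency, with A4 (MIDI 69) at 440 Hz."""
    return 440.0 * 2 ** ((midi - 69) / 12)


if __name__ == "__main__":
    for attention in (0, 30, 70, 100):
        note = attention_to_midi(attention)
        print(attention, note, round(midi_to_hz(note), 1))
```

Since synthesis in this project runs in Unity, one option would be to drive the AudioSource's pitch multiplier with the ratio `midi_to_hz(note) / midi_to_hz(base_note)` for a tone recorded at `base_note`; this is likewise an assumption about the setup.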

MindWave Mobile data is piped to the BrainWaveOSC app, which sends the data to Unity. Unity uses an AudioSource to generate the pitch mapped from the attention data. On the plant side, an Arduino records and logs plant electrical values. These two sources of EEG are part of the environment we exist in. Human EEG, as you can see in the video demo, is used to generate pitch, making directed musical phrases through attention, so humans can control the music to some extent (but not entirely). Plant EEG will be used to generate the subtext of the symphony, forming the chords that the human EEG will play on top of. Both have lives of their own, so the final form of the work is as much a product of the environment of Gamboa as of any conscious control by either party.
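The plant readings are described as forming the chords beneath the melody, but the mapping itself is not given. The sketch below shows one hypothetical approach. It assumes the Arduino logs raw `analogRead` values in the 0 to 1023 range, smooths them with a moving average so the harmony changes slowly (in keeping with its tonic role), and divides the smoothed range into equal bands, one per chord. The chord set (C, F, G and Am triads), the window length, and the class name are illustrative assumptions.

```python
from collections import deque

# Hypothetical chord set: C, F, G and Am triads as MIDI note numbers (C3 = 48).
CHORDS = [
    ("C", [48, 52, 55]),
    ("F", [53, 57, 60]),
    ("G", [55, 59, 62]),
    ("Am", [57, 60, 64]),
]


class PlantChordMapper:
    """Turn slowly varying plant readings into a tonic chord choice."""

    def __init__(self, window=20, max_reading=1023):
        self.readings = deque(maxlen=window)  # keeps only the last `window` readings
        self.max_reading = max_reading

    def update(self, reading):
        """Add one raw reading and return the (name, notes) chord for the smoothed value."""
        reading = max(0, min(self.max_reading, reading))  # clamp to the assumed ADC range
        self.readings.append(reading)
        smoothed = sum(self.readings) / len(self.readings)
        band = int(smoothed / (self.max_reading + 1) * len(CHORDS))
        return CHORDS[min(band, len(CHORDS) - 1)]


if __name__ == "__main__":
    mapper = PlantChordMapper(window=3)
    for value in (0, 1023, 1023, 1023):
        print(value, mapper.update(value)[0])
```

A longer window makes the chord progression slower and steadier; a shorter one lets more of the plant's fluctuation through into the harmony.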

Ray LC’s artistic practice incorporates cutting-edge neuroscience research to build bonds between humans, and between humans and machines. He studied AI (Cal) and neuroscience (UCLA), building interactive art in Tokyo while publishing papers on PTSD. He is a Visiting Professor at the Northeastern University College of Arts, Media and Design. He has been artist-in-residence at BankArt, 1_Wall_Tokyo, Brooklyn Fashion BFDA, Process Space LMCC, NYSCI, and Saari Residence. He has exhibited at Kiyoshi Saito Museum, Tokyo GoldenEgg, Columbia University Macy Gallery, Java Studios, CUHK, Elektra, NYSCI, and Happieee Place ArtLab. He has received awards from Japan's JSPS, the National Science Foundation, the National Institutes of Health, Microsoft Imagine Cup, and the Adobe Design Achievement Awards. http://www.raylc.org/